RAG Implementation, that is, putting Retrieval-Augmented Generation (RAG) into production, has become a foundational approach for enterprises that want AI systems that are not only powerful but also reliable, explainable, and scalable. As generative AI adoption accelerates, organizations are realizing that standalone large language models are not enough for real-world business use.

In enterprise environments, AI must work with internal knowledge, comply with governance rules, and deliver consistent value. This is exactly where RAG Implementation proves its importance.

The shift from experimental AI to enterprise-grade systems

Many companies begin their AI journey with impressive prototypes. Chatbots answer questions fluently, internal demos look promising, and early excitement builds quickly. However, once these systems move closer to real users, limitations start to appear.

Generative AI models can hallucinate, provide outdated information, or respond inconsistently. In enterprise settings—where decisions impact revenue, compliance, or customer trust—these issues are unacceptable.

RAG Implementation addresses this gap by grounding AI outputs in trusted enterprise data, which helps turn experimental AI into production-ready systems.

At NKKTech Global, we see this transition from “AI demo” to “AI system” as the point where most projects succeed or fail.

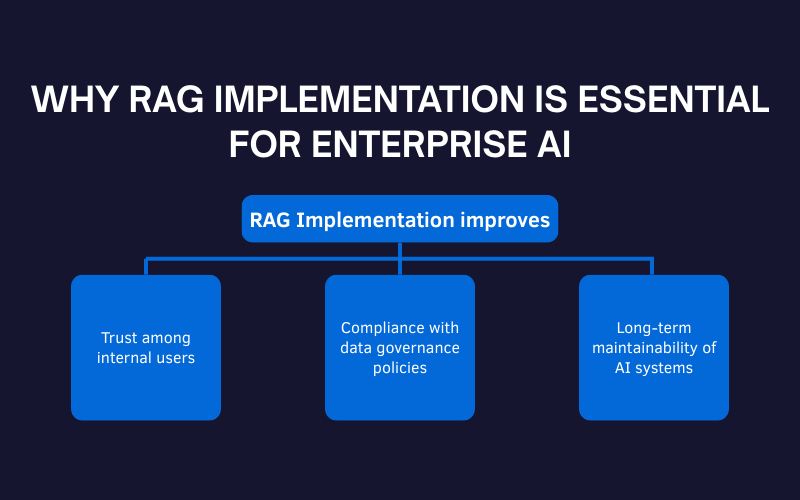

Why RAG Implementation is essential for enterprise AI

RAG Implementation is not simply a technical enhancement. It is an architectural decision that directly affects how AI behaves in business-critical environments.

By combining information retrieval with generative models, RAG grounds AI responses in verified documents, internal databases, and structured knowledge sources. Because each answer can be traced back to the sources it was built from, RAG creates a clear audit trail for where information comes from, an essential requirement for enterprise adoption.
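To make the audit-trail idea concrete, here is a minimal sketch of the retrieve-then-generate pattern in which every passage handed to the model carries its source ID, so the model can cite it and reviewers can check it. The `Document` class, the word-overlap `retrieve` function, and the prompt wording are hypothetical illustrations; production systems usually rank passages with embedding similarity in a vector store rather than word overlap.

```python
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str  # hypothetical identifier, e.g. a file path or database key
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for embedding-based similarity."""
    return {w.strip(".,!?;:").lower() for w in text.split() if w.strip(".,!?;:")}


def retrieve(query: str, docs: list[Document], k: int = 2) -> list[Document]:
    """Rank documents by word overlap with the query and return up to k matches."""
    q = tokenize(query)
    scored = [(len(q & tokenize(d.text)), d) for d in docs]
    scored = [(s, d) for s, d in scored if s > 0]  # drop documents with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(query: str, sources: list[Document]) -> str:
    """Assemble a prompt that labels every passage with its source ID."""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in sources)
    return (
        "Answer using only the sources below and cite their IDs in brackets.\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )
```

For example, with an HR leave policy and an IT VPN guide in `docs`, a question about paid leave retrieves only the HR document, and the prompt sent to the model contains `[hr/leave-policy.md]` as a citable source. Logging those IDs alongside the answer is what turns the response into something auditable.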

Beyond accuracy, RAG Implementation improves:

- Trust among internal users

- Compliance with data governance policies

- Long-term maintainability of AI systems

These benefits explain why RAG is increasingly considered a default pattern for enterprise AI architectures.

Common enterprise challenges RAG Implementation solves

One of the most widely discussed risks of generative AI is hallucination: even when a model sounds confident, the underlying information may be incorrect or irrelevant. RAG Implementation significantly reduces, though does not eliminate, this risk by retrieving relevant enterprise data first and passing it to the model as the context for its response.
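One common way to reinforce this grounding is to let the system abstain when retrieval finds nothing relevant enough, instead of letting the model answer from its own memory. The sketch below assumes the retriever returns `(score, passage)` pairs; the `generate` callable stands in for whatever LLM client you use, and the `min_score` threshold of 0.5 is an arbitrary placeholder that would need tuning on real data.

```python
from typing import Callable

FALLBACK = "I could not find this in the approved knowledge base."


def grounded_answer(
    query: str,
    hits: list[tuple[float, str]],  # (relevance score, passage) pairs from any retriever
    generate: Callable[[str], str],  # hypothetical LLM call: prompt -> answer text
    min_score: float = 0.5,  # assumed threshold; tune it on your own data
) -> str:
    """Call the model only when retrieval found sufficiently relevant passages."""
    relevant = [passage for score, passage in hits if score >= min_score]
    if not relevant:
        # Nothing trustworthy was retrieved: abstain instead of letting the model guess.
        return FALLBACK
    context = "\n".join(relevant)
    prompt = (
        "Use only the context to answer. If the context is insufficient, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )
    return generate(prompt)
```

The design choice here is to fail visibly: a clear "not found" message is easier for users and reviewers to handle than a fluent but unsupported answer.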

In addition, enterprises benefit from:

- Faster knowledge updates without retraining models

- Better control over what data AI can access

- Easier scaling as data volume and usage grow
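The first two benefits follow from where knowledge lives in a RAG system: in an index, not in the model's weights. The toy index below is a hypothetical sketch, not a real vector-database API. It shows that upserting or deleting a chunk changes answers immediately without retraining, and that an assumed per-chunk `allowed_groups` field lets access rules be enforced at retrieval time, before any text reaches the model.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    chunk_id: str
    text: str
    allowed_groups: set[str] = field(default_factory=set)  # assumed access-control metadata


class KnowledgeIndex:
    """Toy in-memory index; a production system would use a vector database."""

    def __init__(self) -> None:
        self._chunks: dict[str, Chunk] = {}

    def upsert(self, chunk: Chunk) -> None:
        # New or corrected knowledge becomes searchable immediately; no retraining.
        self._chunks[chunk.chunk_id] = chunk

    def delete(self, chunk_id: str) -> None:
        # Retired documents stop being retrievable as soon as they are removed.
        self._chunks.pop(chunk_id, None)

    def search(self, query: str, user_groups: set[str]) -> list[Chunk]:
        terms = set(query.lower().split())
        results = []
        for chunk in self._chunks.values():
            # Skip chunks the user's groups may not see, so the model never receives them.
            if not chunk.allowed_groups & user_groups:
                continue
            if terms & set(chunk.text.lower().split()):
                results.append(chunk)
        return results
```

Filtering before generation matters: once restricted text is in the prompt, instructions alone cannot reliably stop the model from repeating it.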

In NKKTech Global’s experience, these capabilities are critical when AI systems move from pilot projects to company-wide deployment.

Designing a realistic RAG Implementation roadmap

Successful RAG Implementation does not start with tools or frameworks. It starts with a clear understanding of business needs and constraints.

Step 1: Define a focused business use case

The most successful RAG Implementation projects begin with one clear problem. Examples include:

- Internal AI assistants for employees

- Document search and summarization tools

- Customer support knowledge systems

Trying to solve multiple problems at once often leads to complexity and unclear results. A focused scope ensures faster validation and measurable impact.

Step 2: Prepare and structure enterprise data

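Data preparation is often underestimated, yet it largely determines the system’s quality: if the right passage cannot be retrieved, the model cannot use it. Documents must be cleaned, split into logically coherent chunks, and enriched with metadata such as source and owning department to improve retrieval accuracy.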
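As an illustration of these three steps, here is a minimal sketch that cleans extracted text, splits it into overlapping word windows, and tags each chunk with metadata. The window size of 50 words, the 10-word overlap, and the `source`/`department` fields are assumptions chosen for the example. Fixed-size windows are only one strategy; splitting on headings or paragraphs often preserves meaning better.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    metadata: dict[str, str]


def clean(text: str) -> str:
    """Collapse runs of whitespace left over from PDF or HTML extraction."""
    return re.sub(r"\s+", " ", text).strip()


def chunk_document(
    text: str, source: str, department: str, max_words: int = 50, overlap: int = 10
) -> list[Chunk]:
    """Split a cleaned document into overlapping word windows tagged with metadata."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be non-negative and smaller than max_words")
    words = clean(text).split()
    step = max_words - overlap  # consecutive windows share `overlap` words
    chunks = []
    for i, start in enumerate(range(0, len(words), step)):
        window = words[start : start + max_words]
        chunks.append(
            Chunk(
                text=" ".join(window),
                metadata={"source": source, "department": department, "chunk_index": str(i)},
            )
        )
        if start + max_words >= len(words):
            break  # this window already reaches the end of the document
    return chunks
```

The overlap keeps a sentence that straddles a boundary retrievable from either side, and the metadata lets the retriever filter by source or department later.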

At NKKTech Global, data readiness is treated as a strategic step—not a technical afterthought.

Step 3: Build the RAG pipeline and integrate workflows

Once data is ready, the RAG pipeline connects retrieval mechanisms with generative models. At this stage, integration with existing systems is critical.

Enterprise AI must fit naturally into workflows, not disrupt them. Monitoring, logging, and feedback loops ensure the system improves over time.
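One way to set up the monitoring and feedback loop is to emit a structured log record for every request and key user feedback to the same request ID, so the two can be joined later for analysis. The sketch below uses Python's standard `logging` module; `retrieve` and `generate` are hypothetical callables standing in for your own pipeline components, and the logged field names are assumptions.

```python
import json
import logging
import time
import uuid
from typing import Callable

logger = logging.getLogger("rag")


def answer_with_logging(
    query: str,
    retrieve: Callable[[str], list[str]],  # hypothetical: returns retrieved document IDs
    generate: Callable[[str, list[str]], str],  # hypothetical LLM call
) -> tuple[str, str]:
    """Run one RAG request, log a structured record, and return (request_id, answer)."""
    request_id = str(uuid.uuid4())
    start = time.perf_counter()
    doc_ids = retrieve(query)
    answer = generate(query, doc_ids)
    # Note: raw queries may contain sensitive data; mask them if your policies require it.
    logger.info(json.dumps({
        "event": "rag_answer",
        "request_id": request_id,
        "query": query,
        "retrieved_ids": doc_ids,
        "latency_ms": round((time.perf_counter() - start) * 1000, 1),
    }))
    return request_id, answer


def record_feedback(request_id: str, helpful: bool, comment: str = "") -> None:
    """Log user feedback keyed by request_id so it can be joined with the answer log."""
    logger.info(json.dumps({
        "event": "rag_feedback",
        "request_id": request_id,
        "helpful": helpful,
        "comment": comment,
    }))
```

Answers marked unhelpful, together with the document IDs that were retrieved for them, point directly at gaps in the data or the retrieval strategy.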

Operating RAG systems in production

RAG Implementation does not end at deployment. Real value comes from continuous evaluation and optimization. As user behavior changes and data grows, retrieval strategies and prompts must evolve.
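Continuous evaluation can start with a simple retrieval metric. The sketch below computes recall@k, the average fraction of known-relevant documents that appear in the top k results, over a small hand-labeled evaluation set. The `retrieve` callable and the shape of the evaluation set are assumptions; any retriever that returns ranked document IDs would fit.

```python
from typing import Callable


def recall_at_k(
    eval_set: list[tuple[str, set[str]]],  # (query, IDs of documents that answer it)
    retrieve: Callable[[str, int], list[str]],  # any retriever returning ranked doc IDs
    k: int = 5,
) -> float:
    """Average fraction of relevant documents found in the top-k results per query."""
    if not eval_set:
        raise ValueError("eval_set must not be empty")
    total = 0.0
    for query, relevant in eval_set:
        if not relevant:
            raise ValueError(f"no relevant documents labeled for query: {query!r}")
        top_k = set(retrieve(query, k)[:k])
        total += len(top_k & relevant) / len(relevant)
    return total / len(eval_set)
```

Re-running the same evaluation set after every change to chunking, embeddings, or prompts turns "the system got worse" from an anecdote into a measurable regression.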

This operational mindset is what separates long-term enterprise AI success from short-lived experiments.

NKKTech Global’s approach to RAG Implementation

What differentiates NKKTech Global is a strong focus on business alignment. Every RAG Implementation project is designed with scalability, governance, and long-term ROI in mind.

Rather than optimizing only for model performance, the goal is to build AI systems enterprises can trust and expand confidently.

Conclusion

RAG Implementation is the key to making generative AI work in real enterprise environments. By grounding AI responses in internal knowledge, organizations gain accuracy, trust, and control.

If you are planning to deploy enterprise AI systems, NKKTech Global can help you design and implement RAG architectures that are ready for production from day one.

Leave a comment or reach out to discuss your enterprise AI use case.

Contact Information:

🌎Website: https://nkk.com.vn

📩Email: contact@nkk.com.vn

📌LinkedIn: https://www.linkedin.com/company/nkktech